Project: Saddles in Deep Learning

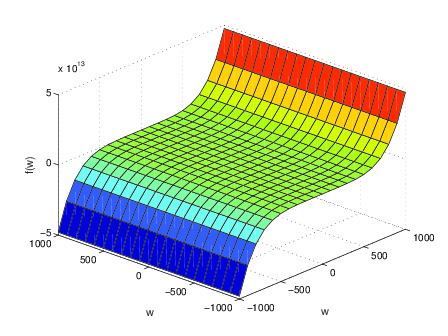

Convergence to local minima is well understood in the non-convex optimization literature, and deep neural networks are commonly assumed to converge to local minima as well. However, saddle points proliferate as the dimension increases. In this work we hypothesise that:

- All deep networks converge to degenerate saddles

- Good saddles are good enough
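
To make the terminology above concrete, here is a minimal sketch of how a critical point can be labelled from the eigenvalues of its Hessian. It is an illustration, not the project's code: the function name `classify_critical_point`, the tolerance `tol`, and the label strings are all hypothetical. It also assumes one common definition of "degenerate", namely that the Hessian has at least one (near-)zero eigenvalue, so the second-order test alone is inconclusive.

```python
import numpy as np


def classify_critical_point(hessian, tol=1e-8):
    """Label a critical point from the eigenvalue signs of its Hessian.

    Assumes the gradient is already zero at the point. `tol` is a
    hypothetical threshold below which an eigenvalue is treated as zero.
    """
    h = np.asarray(hessian, dtype=float)
    # Symmetrize to guard against small numerical asymmetries.
    eigvals = np.linalg.eigvalsh((h + h.T) / 2.0)

    n_neg = int(np.sum(eigvals < -tol))
    n_pos = int(np.sum(eigvals > tol))
    n_zero = eigvals.size - n_neg - n_pos

    if n_zero > 0:
        # Singular Hessian: flat directions exist (assumed meaning of "degenerate").
        label = "degenerate"
    elif n_neg == 0:
        label = "local minimum"
    elif n_pos == 0:
        label = "local maximum"
    else:
        label = "strict saddle"
    return label, (n_neg, n_zero, n_pos)


if __name__ == "__main__":
    # f(x, y) = x^2 - y^2 at the origin: one descent and one ascent direction.
    print(classify_critical_point(np.diag([2.0, -2.0])))
    # f(x, y) = x^2 + y^4 at the origin: the Hessian has a zero eigenvalue.
    print(classify_critical_point(np.diag([2.0, 0.0])))
```

For a real network the full Hessian is usually too large to form explicitly, so in practice its spectrum would have to be estimated (for example from Hessian-vector products) rather than computed with a dense eigendecomposition as in this toy version.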

The source code for this project can be found here.